The key to calculating battery life in the IoT

As Cliff Ortmeyer, Global Head of Solutions Development at Premier Farnell, explains, the internet has dramatically changed the way we design most electronic systems, with everything from signage at a bus stop to complex industrial systems now relying on connectivity as a key part of their functionality. Perhaps the biggest change, however, is the introduction of sensor systems that collect data and pass it to the cloud.

These small ‘things’ often have no access to mains power, so they must power themselves, using either batteries or energy harvesting.

For many applications, energy harvesting is the most promising solution, offering the prospect of indefinite operation provided the device can be designed to use less power than energy harvesting can supply.

However, many applications are not suitable for this approach because of limited energy availability or excessive system power demands. In such cases, batteries are needed to power the system.

Unfortunately, batteries will need changing at some point. With the cost of replacing batteries often higher than the cost of the IoT device itself, estimating the battery lifetime becomes critical.

Factors affecting battery life

The battery life of an IoT device is determined by a simple calculation: the battery capacity divided by the average current drawn from it. Minimising the energy used by the device or increasing the battery capacity will increase battery life and reduce the total cost of ownership of the product.
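A minimal sketch of this calculation in Python, assuming a simple two-state profile in which the device is either asleep or fully active. The function names and the example figures (3µA asleep, 5mA awake for 0.1% of the time, 225mAh capacity) are illustrative assumptions rather than values for any particular product. Capacity in mAh divided by current in mA gives hours.

```python
HOURS_PER_YEAR = 24 * 365.25


def average_current_ua(sleep_ua: float, active_ua: float, active_fraction: float) -> float:
    """Time-weighted average current (µA) for a two-state sleep/active profile."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return sleep_ua * (1.0 - active_fraction) + active_ua * active_fraction


def battery_life_hours(capacity_mah: float, avg_current_ua: float) -> float:
    """Battery life = capacity / average current (mAh / mA gives hours)."""
    return capacity_mah / (avg_current_ua / 1000.0)


# Illustrative values only: 3 µA asleep, 5 mA awake for 0.1% of the time.
avg = average_current_ua(sleep_ua=3.0, active_ua=5000.0, active_fraction=0.001)
hours = battery_life_hours(capacity_mah=225.0, avg_current_ua=avg)
print(f"average current {avg:.3f} µA, life {hours:.0f} h ({hours / HOURS_PER_YEAR:.1f} years)")
```

Even at a 0.1% active fraction, the active current contributes more to the average than the sleep current does, which is why the time spent awake matters as much as the sleep current itself.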

As the battery is often the largest part of an IoT sensor system, its size usually leaves engineers with a limited choice of which one to use. The wide range of processors, communications technologies and software algorithms available, however, gives plenty of scope to design the rest of the system to achieve the required lifetime.

IoT processor sleep modes

Processors designed for IoT applications offer a variety of ultra-low power sleep modes. Consider, for example, the TI CC2650MODA wireless microcontroller. Figure 1 (below) shows the current consumed when the device is operating in different states. The power consumption varies by six orders of magnitude from shutdown to active operation.

Figure 1: Current consumption of the TI CC2650MODA in its different operating states

Unless data is sampled very infrequently, shutting down the processor completely offers few advantages: additional circuitry and code are needed to restart it, adding cost and complexity. Furthermore, the standby modes consume less than 3µA, a current that would take at least eight years to discharge a CR2032 coin cell. That is longer than the lifetime of many IoT devices and as long as the shelf life of the CR2032 itself.
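The eight-year figure can be checked with a quick calculation. This sketch assumes a nominal CR2032 capacity of 225mAh to 240mAh (quoted capacities vary by manufacturer, so check the datasheet of the chosen cell). It ignores self-discharge and any active-mode current, so it shows only how long the standby current alone would take to drain the cell.

```python
HOURS_PER_YEAR = 24 * 365.25


def standby_life_years(capacity_mah: float, standby_ua: float) -> float:
    """Years for a constant standby current to drain the stated capacity."""
    return capacity_mah / (standby_ua / 1000.0) / HOURS_PER_YEAR


# Assumed nominal CR2032 capacities; check the datasheet of the chosen cell.
for capacity in (225.0, 240.0):
    print(f"{capacity:.0f} mAh at 3 µA: {standby_life_years(capacity, 3.0):.1f} years")
```

Because the standby current is below 3µA, the real standby-only figure would be longer still.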

Selecting the appropriate standby mode can also be important. The lowest-power standby mode consumes around one third of the current of the highest-power option but, critically, retains very little of the processor state. Although some IoT applications will need the lowest-power sleep modes, many will choose to preserve the cache, minimising the number of cycles needed to complete the processing in active mode.
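The choice between standby modes can be framed as a break-even calculation: the deeper mode saves current for every second asleep, but each wake-up then costs extra active time to rebuild the lost state. The sketch below assumes this simple model. Apart from the one-third ratio taken from the text, the figures (3µA and 1µA standby, 3mA active, 1ms of extra processing per wake-up) are hypothetical.

```python
def break_even_period_s(light_ua: float, deep_ua: float,
                        active_ua: float, extra_active_s: float) -> float:
    """Sleep period above which the deeper standby mode saves charge overall.

    The deep mode saves (light_ua - deep_ua) for every second asleep, but each
    wake-up costs extra_active_s of additional active time at active_ua to
    rebuild state (for example, refilling the cache).
    """
    saving_rate = light_ua - deep_ua
    if saving_rate <= 0:
        raise ValueError("deep mode must draw less current than light mode")
    return active_ua * extra_active_s / saving_rate


# Hypothetical figures: 3 µA vs 1 µA standby (one third), 3 mA active,
# 1 ms of extra processing per wake-up to restore lost state.
t = break_even_period_s(light_ua=3.0, deep_ua=1.0, active_ua=3000.0, extra_active_s=1e-3)
print(f"deeper mode pays off if the device sleeps longer than {t:.2f} s per wake-up")
```

With these numbers the deeper mode pays off for sleep periods longer than 1.5s; a device that wakes more often would do better preserving its state.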

Processing in active mode is a trade-off. Figure 1 shows that current consumption increases linearly with clock frequency, a consequence of the CMOS technology used for this type of IoT processor. Faster clock speeds might therefore seem to mean shorter battery life. However, because there is a fixed ‘base’ current of 1.45mA, running the same algorithm at a faster clock shortens the wake time, and with it the time for which that base current flows. Slowing the clock can therefore be a false economy that actually reduces battery life.
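A sketch of this trade-off, assuming current rises linearly with clock frequency as Figure 1 indicates. The 1.45mA base current is from the text; the 31µA/MHz slope is an assumed value (check it against Figure 1 or the device datasheet), and the 480,000-cycle task is hypothetical. Because the charge per task is (base + slope × f) × cycles / f, the base-current term shrinks as the clock rises while the slope term stays constant.

```python
BASE_MA = 1.45            # 'base' active current quoted in the text
SLOPE_MA_PER_MHZ = 0.031  # assumed per-MHz increment; check the device datasheet


def task_charge_uc(cycles: float, clock_mhz: float) -> float:
    """Charge (µC) to execute `cycles` at `clock_mhz` under a linear current model."""
    current_ma = BASE_MA + SLOPE_MA_PER_MHZ * clock_mhz
    duration_s = cycles / (clock_mhz * 1e6)
    return current_ma * 1000.0 * duration_s  # mA -> µA, multiplied by s gives µC


for mhz in (6, 12, 24, 48):
    print(f"{mhz:>2} MHz: {task_charge_uc(480_000, mhz):7.2f} µC per task")
```

Under these assumptions the 48MHz run uses less than a quarter of the charge of the 6MHz run. The model ignores the small extra sleep current during the time saved, and the wake-up overhead.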

There is also a finite wake time when switching from one mode to another: 151µs for the CC2650MODA to switch from standby to active, for example. At the maximum clock frequency of 48MHz, this means that power is consumed for more than 7,000 clock cycles while the processor wakes. For applications that run only a small amount of code on each wake-up, this overhead dominates, and slowing down the clock, trading a longer code execution time for lower current during wake-up, can extend battery life. Minimising the number of wake operations, and performing as many tasks as possible before returning to standby, can also increase battery life.
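The wake-up overhead is easy to quantify. This sketch uses the 151µs transition time and 48MHz clock quoted for the CC2650MODA; the 3mA current during the transition and the wake-up counts (one per second, versus one in ten after grouping tasks) are hypothetical.

```python
WAKE_TIME_S = 151e-6  # standby-to-active transition time quoted for the CC2650MODA
CLOCK_HZ = 48e6       # maximum clock frequency quoted in the text

cycles_during_wake = WAKE_TIME_S * CLOCK_HZ
print(f"wake-up lasts about {cycles_during_wake:.0f} clock cycles at 48 MHz")


def daily_wake_charge_mc(wakes_per_day: int, wake_current_ma: float) -> float:
    """Charge (mC, i.e. mA x s) spent per day on standby-to-active transitions."""
    return wakes_per_day * WAKE_TIME_S * wake_current_ma


WAKE_CURRENT_MA = 3.0  # hypothetical current drawn during each transition
separate = daily_wake_charge_mc(86_400, WAKE_CURRENT_MA)  # one wake-up per second
grouped = daily_wake_charge_mc(8_640, WAKE_CURRENT_MA)    # tasks grouped ten to one
print(f"separate: {separate:.1f} mC/day, grouped: {grouped:.1f} mC/day")
```

The overhead scales directly with the number of wake-ups, so grouping tasks ten to one cuts this part of the energy budget tenfold.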

Modern IoT processors are very complex products that integrate many peripherals so that a single chip can satisfy diverse requirements. Frequently, however, IoT devices, particularly simple sensors, do not need this degree of functionality.

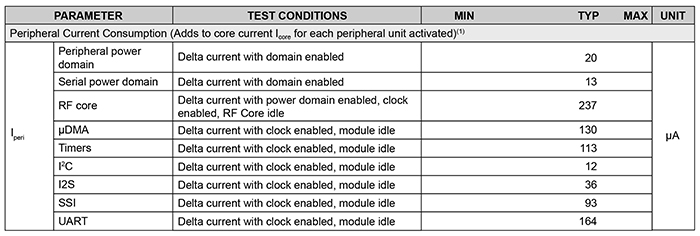

Figure 2 (above) shows the current consumed by the peripherals available in the TI CC2650MODA family. Although each peripheral draws only a small current, of the order of tens or low hundreds of microamperes, disabling unused ones can have a significant impact. If no serial connectivity is required, for example, a total of 318µA can be saved. This may not seem much, but it is more than 100 times the sub-3µA standby current of the processor.
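How much the 318µA matters depends on how long the peripherals stay powered. The sketch below compares three cases for an assumed 225mAh cell: serial blocks disabled, enabled only while the processor is active, and left on permanently. The 8µA baseline average current and the 0.1% active fraction are hypothetical.

```python
def life_days(capacity_mah: float, avg_ua: float) -> float:
    """Days for a constant average current (µA) to drain the capacity (mAh)."""
    return capacity_mah / (avg_ua / 1000.0) / 24.0


CAPACITY_MAH = 225.0     # assumed CR2032-class capacity
BASE_AVG_UA = 8.0        # hypothetical average current with serial blocks disabled
SERIAL_UA = 318.0        # saving quoted for disabling the serial peripherals
ACTIVE_FRACTION = 0.001  # hypothetical: processor active 0.1% of the time

cases = {
    "serial disabled": BASE_AVG_UA,
    "serial on only while active": BASE_AVG_UA + SERIAL_UA * ACTIVE_FRACTION,
    "serial left on permanently": BASE_AVG_UA + SERIAL_UA,
}
for label, avg_ua in cases.items():
    print(f"{label:>28}: {life_days(CAPACITY_MAH, avg_ua):7.1f} days")
```

Left on permanently, the serial peripherals cut the life from over three years to about a month; powered only during active periods, they cost a few percent.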

IoT comms technologies

The choice of communications technology is often dictated by the system requirements. For battery-powered IoT systems, this almost always means an RF link. For wireless communications, greater range or a higher data rate typically demands higher power consumption, so the obvious choice is often the lowest-power technology that meets the range and data-rate requirements.

For IoT sensors there are several popular technologies. LoRa, for example, can be used to build a low-power, long-range WAN with a range of several kilometres, while Bluetooth Low Energy (BLE) communicates only over short distances but consumes significantly less current. Another decision is whether to use a radio integrated on the processor chip or a separate communications chip.

Managing the communications interface is critical: even low-power communications technologies will drain a battery very quickly, and the processing they require often consumes more power than the RF stage itself.

To make the most of the battery capacity spent on communications, many IoT systems wake the communications circuits only when they have enough data to make a transmission worthwhile.
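The benefit of waiting until there is enough data to send can be sketched with a simple cost model: every transmission pays a fixed overhead (radio start-up, connection and protocol framing) plus a cost per payload byte. The overhead and per-byte charges, and the 60 four-byte readings, are hypothetical; real values depend on the radio and protocol.

```python
def tx_charge_uc(messages: int, payload_bytes: int,
                 overhead_uc: float, per_byte_uc: float) -> float:
    """Charge (µC) for `messages` transmissions of `payload_bytes` each."""
    return messages * (overhead_uc + payload_bytes * per_byte_uc)


# Hypothetical costs: 50 µC fixed per transmission, 0.5 µC per payload byte.
OVERHEAD_UC, PER_BYTE_UC = 50.0, 0.5
READINGS, READING_BYTES = 60, 4

one_by_one = tx_charge_uc(READINGS, READING_BYTES, OVERHEAD_UC, PER_BYTE_UC)
batched = tx_charge_uc(1, READINGS * READING_BYTES, OVERHEAD_UC, PER_BYTE_UC)
print(f"one transmission per reading: {one_by_one:.0f} µC")
print(f"single batched transmission:  {batched:.0f} µC")
```

In practice the batch size is limited by the protocol's maximum payload and by how stale the application can allow its data to become.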

Sensor selection

Sensors can have a significant impact on the battery life of an IoT system. Resistance temperature detectors and thermistors, for example, change their resistance with temperature. A simple application where accuracy is not important might read them with a voltage divider, but a high-precision system will need a current source, which requires more power. For many applications, integrated temperature sensors such as the TI LM35DZ are a good solution: this device is accurate to ±0.25°C at room temperature and draws only 60µA. Whichever sensor is chosen, it is critical that it draws power only when it is being used.
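Power-gating the sensor, for example through a switched supply, turns its supply current into a small average. The sketch uses the 60µA figure quoted for the LM35DZ and assumes a hypothetical 20ms powered window per reading (enough for the output to settle; check the sensor's turn-on time) with one reading per minute.

```python
SENSOR_UA = 60.0  # supply current quoted for the LM35DZ


def sensor_avg_ua(on_time_s: float, period_s: float) -> float:
    """Average sensor current when it is powered only for on_time_s each period."""
    return SENSOR_UA * min(on_time_s / period_s, 1.0)


always_on = sensor_avg_ua(60.0, 60.0)  # powered for the whole one-minute period
gated = sensor_avg_ua(0.02, 60.0)      # hypothetical 20 ms window per reading
print(f"always on: {always_on:.1f} µA average, gated: {gated:.3f} µA average")
```

Left powered, the sensor alone draws around 20 times the sub-3µA standby current of the processor; gated, its contribution becomes negligible.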

Battery technologies for IoT

One problem when selecting batteries is the limited data available for many of them. Apart from the physical dimensions and output voltage, often the only parameter specified is capacity. Capacity is obviously critical, as it determines the total energy available to an IoT device.

Battery quality has a significant impact on capacity. Simply specifying a particular type risks acquiring a cheaper device with low capacity, which in turn will shorten the battery life of the IoT application and add to battery replacement costs. A given form factor may also be available in several chemistries, and the choice of chemistry can have a dramatic impact on battery life.

Given the brief datasheets supplied for many batteries, it is tempting to assume that batteries are very simple devices with a fixed capacity, but in practice this is simply not true. For example, a load that draws more current extracts less of the rated capacity, so the lifetime falls dramatically. More importantly for some applications, the capacity of the battery drops considerably as the temperature falls.
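One simple way to account for this is to scale the nominal capacity by derating factors read from the manufacturer's discharge curves for the expected load and temperature. The sketch below assumes the two effects can simply be multiplied, which is a simplification; the 85% and 70% factors are placeholders, not data for any real cell.

```python
def usable_capacity_mah(nominal_mah: float, load_factor: float, temp_factor: float) -> float:
    """Nominal capacity scaled by derating factors read from manufacturer curves.

    Both factors are fractions in (0, 1]; 1.0 means no derating.
    """
    for name, factor in (("load_factor", load_factor), ("temp_factor", temp_factor)):
        if not 0.0 < factor <= 1.0:
            raise ValueError(f"{name} must be in (0, 1]")
    return nominal_mah * load_factor * temp_factor


# Placeholder factors, NOT datasheet values: 85% at the design's load current,
# 70% at the lowest expected operating temperature.
usable = usable_capacity_mah(225.0, 0.85, 0.70)
print(f"usable capacity: {usable:.1f} mAh of 225 mAh nominal")
```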

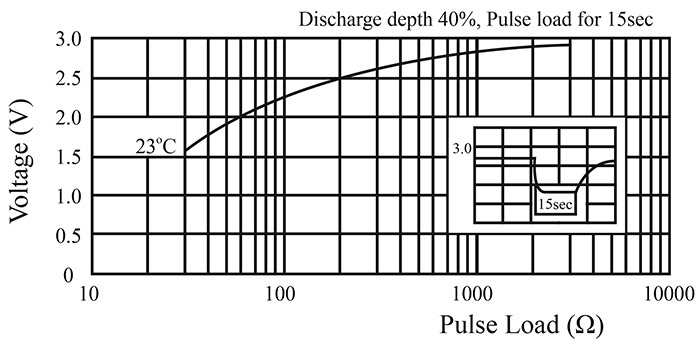

IoT applications draw current in pulses: the processor and sensor might draw several milliamperes for a short burst and then switch into a low power mode for a long period. Each pulse causes the battery's output voltage to drop. Figure 3 (above) shows that even a 2mA pulsed load will cause the output of a CR2032 to fall from 3V to around 2.2V.
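The drop from 3V to 2.2V at 2mA implies an effective internal resistance of about 400Ω. The sketch below uses that single-point estimate in a linear model to predict the voltage under other pulse currents and compare it with a minimum supply voltage; the 1.8V threshold is an assumption (use the figure from the datasheet of the device being powered). In practice the effective resistance rises as the cell discharges and as the temperature falls, so the real margin will shrink over the battery's life.

```python
OPEN_CIRCUIT_V = 3.0  # unloaded CR2032 voltage quoted in the text
LOADED_V = 2.2        # voltage under the 2 mA pulse quoted in the text
PULSE_A = 2e-3

# Effective internal resistance from a single measurement point (about 400 ohms).
r_internal = (OPEN_CIRCUIT_V - LOADED_V) / PULSE_A

MIN_SUPPLY_V = 1.8    # assumed minimum operating voltage of the load


def loaded_voltage(pulse_a: float) -> float:
    """Terminal voltage under a pulse, using a linear internal-resistance model."""
    return OPEN_CIRCUIT_V - pulse_a * r_internal


for ma in (1, 2, 4):
    v = loaded_voltage(ma / 1000)
    status = "ok" if v >= MIN_SUPPLY_V else "below minimum"
    print(f"{ma} mA pulse: {v:.2f} V ({status})")
```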

Battery shelf life is often ignored by engineers on the assumption that it relates to storing, rather than using, the battery. IoT applications, however, often need to operate for years from a single battery, and the ageing that limits shelf life continues while the battery is in use, so shelf life sets an upper limit on the lifetime of the device. Most batteries offer a quoted shelf life of only seven or eight years.
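A sketch of how shelf life and self-discharge can be folded into the estimate. It assumes self-discharge removes a fixed fraction of the nominal capacity each year (1% per year here, a hypothetical figure to be replaced with the manufacturer's data) and caps the result at the quoted shelf life.

```python
HOURS_PER_YEAR = 24 * 365.25


def life_years(capacity_mah: float, avg_ua: float,
               self_discharge_per_year: float, shelf_life_years: float) -> float:
    """Battery life including self-discharge, capped at the quoted shelf life.

    Self-discharge is modelled as a constant loss of a fixed fraction of the
    nominal capacity per year (a simplifying assumption).
    """
    # mAh lost per year -> average mA -> µA
    self_discharge_ua = capacity_mah * self_discharge_per_year / HOURS_PER_YEAR * 1000.0
    years = capacity_mah / ((avg_ua + self_discharge_ua) / 1000.0) / HOURS_PER_YEAR
    return min(years, shelf_life_years)


for load_ua in (3.0, 1.0):
    print(f"{load_ua:.0f} µA load: {life_years(225.0, load_ua, 0.01, 8.0):.1f} years")
```

With a 3µA load the result is just under eight years; with a 1µA load the calculation gives about 20 years, but the shelf-life cap limits it to eight.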

Conclusion

Developing an IoT device that can operate from a battery requires careful engineering. Although the choice of components is important, bad design decisions can swamp the benefit of a lower-power processor. The key to achieving good battery life is to keep the processor in a low power standby mode as much as possible and to minimise the use of wireless communications.

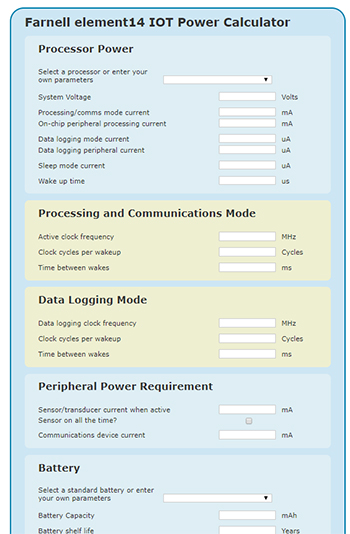

In this context, Farnell element14 has developed a calculator that allows users to estimate the battery life of an IoT system quickly and easily (Figure 4, above).

Users just enter the relevant parameters for their processor, communications device, sensor and battery as well as key details on software operation, and the calculator then estimates the battery life of the IoT design.
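The code below is not the element14 calculator, whose internals are not described here. It is a minimal sketch of the same idea, assuming each component can be described by a current drawn for a fixed duration at a fixed period. The `Activity` class, the function names and every figure in the profile are hypothetical. The sleep current is applied for the whole period, a slight overestimate.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365.25


@dataclass
class Activity:
    """One periodic activity: draws current_ua for duration_s every period_s."""
    name: str
    current_ua: float
    duration_s: float
    period_s: float

    def average_ua(self) -> float:
        return self.current_ua * self.duration_s / self.period_s


def estimate_years(capacity_mah: float, sleep_ua: float, activities: list[Activity]) -> float:
    """Capacity divided by the sum of the sleep current and each activity's average."""
    total_ua = sleep_ua + sum(a.average_ua() for a in activities)
    return capacity_mah / (total_ua / 1000.0) / HOURS_PER_YEAR


# Every number below is hypothetical, chosen only to show how the pieces combine.
profile = [
    Activity("processor", current_ua=3000.0, duration_s=0.010, period_s=60.0),
    Activity("sensor", current_ua=60.0, duration_s=0.020, period_s=60.0),
    Activity("radio", current_ua=6000.0, duration_s=0.005, period_s=600.0),
]
years = estimate_years(225.0, sleep_ua=3.0, activities=profile)
for a in profile:
    print(f"{a.name:>9}: {a.average_ua():.3f} µA average")
print(f"estimated life: {years:.1f} years")
```

Listing each component's average current also shows where the budget goes: with these numbers the 3µA sleep current dominates, so a lower-power standby mode would help more than optimising the radio.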